A Tentacular Release

Today we are taking the covers off the first enterprise-grade release of the Ceph Hammer codebase: Red Hat Ceph Storage 1.3, my first Red Hat product.

The Ceph Engineering team and all the supporting R&D functions, from QA to Documentation, performed like clockwork, allowing us to hit an ambitious schedule right on target and announce today at the Red Hat Summit with immediate availability. Now, that’s how it is done!

What’s new?

Moving to Hammer from the upstream Firefly release, which has carried all Ceph production customers to date, has a significant impact across the board. The change brings improvements in many areas, too many and often too technical to detail here, but in aggregate they move the state of the art forward, just as upgrading the Linux kernel would. The end result is that the system as a whole performs better and more smoothly. We should expect this of every major Ceph release, just as we already do of the kernel’s, even when we, as end users, do not necessarily understand all the gory details of what is happening underneath.

I will detail feature-level changes in a future post, but in broad strokes the 1.3 release has three main themes.

The Petabyte Release

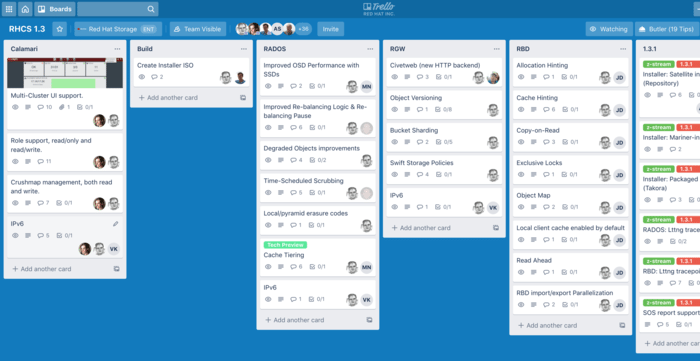

RHCS 1.3 has three main themes, spanning robustness at scale, performance improvements, and operational efficiency.

Ceph does a lot for the admin by default. We have continued to refine the algorithms inside Ceph so that it does the right thing for users with large, multi-petabyte clusters where ‘failure is normal’. The results are best captured by the comments CERN’s Dan van der Ster made to OpenStack Superuser earlier this week:

“For less than 10-petabyte clusters, Ceph just works, you don’t have to do much” — Dan van der Ster (CERN)

A good example of the robustness tuning you can see in 1.3 comes from another member of the Ceph community: Yahoo. Yahoo Engineering chose to adopt Ceph at massive scale for object storage, initially backing Flickr, with plans to expand to Yahoo Mail and Tumblr. With such demanding use cases, the team raised a concern about how the Ceph Object Gateway (RGW) stores buckets: each bucket’s index is kept in a single RADOS object, which at Yahoo scale could become a hotspot and a performance bottleneck. In true Open Source spirit, they stepped up and contributed bucket index sharding to the Ceph codebase: the index can now be split into shards, stored as separate RADOS objects spread across different nodes of the cluster.
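To make the idea concrete, here is a minimal sketch of how a sharded index spreads object keys: each key is hashed, and the hash modulo the shard count picks the index shard. The `shard_for_key` function is hypothetical and uses POSIX `cksum` as a stand-in hash; RGW uses its own internal hash function. In Hammer-era releases, to my understanding, the shard count for newly created buckets is set with the `rgw_override_bucket_index_max_shards` option (the default, 0, means no sharding); check the documentation for your release.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of hash-based bucket index sharding.
# Assumption: POSIX cksum (a CRC) stands in for RGW's own internal hash.

# shard_for_key KEY NUM_SHARDS
# Prints the index shard (0 .. NUM_SHARDS-1) responsible for KEY.
shard_for_key() {
  local key=$1 num_shards=$2 hash
  # cksum prints "<crc> <byte-count>"; keep only the CRC.
  hash=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
  echo $(( hash % num_shards ))
}
```

Because the hash is deterministic, `shard_for_key photos/cat.jpg 8` always names the same shard, so every update for that key lands on one index object, while different keys spread across all eight.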

Another good example of enterprise-grade robustness is the improved automatic rebalancing logic, which now prioritizes degraded objects (those missing some of their copies) over merely displaced ones (fully replicated, but not yet in their intended location). By the way, you can now also manually pause rebalancing if it is affecting cluster performance, and define a time window for scrubbing so that it runs outside business hours instead of adding to your peak load.
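The operator controls above can be wrapped in a few shell helpers. This is a sketch assuming a Hammer-era cluster that provides the `norebalance` flag and the `osd_scrub_begin_hour`/`osd_scrub_end_hour` options; the function names are hypothetical, and setting `CEPH=echo` turns every command into a dry run. `scrub_allowed` models the window semantics as I understand them, where a window whose begin hour is later than its end hour wraps past midnight.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for pausing rebalancing and setting a scrub window.
# CEPH defaults to the real CLI; run with CEPH=echo for a dry run.
CEPH=${CEPH:-ceph}

# Pause or resume rebalancing with the cluster-wide norebalance flag.
pause_rebalance()  { $CEPH osd set norebalance; }
resume_rebalance() { $CEPH osd unset norebalance; }

# set_scrub_window BEGIN_HOUR END_HOUR
# Injects the scrub window into all running OSDs (not persistent across restarts).
set_scrub_window() {
  $CEPH tell 'osd.*' injectargs "--osd_scrub_begin_hour $1 --osd_scrub_end_hour $2"
}

# scrub_allowed HOUR BEGIN END -> prints "yes" or "no"
# Models the window: if BEGIN < END, HOUR must be in [BEGIN, END);
# otherwise (BEGIN >= END) it wraps past midnight: HOUR >= BEGIN or HOUR < END.
scrub_allowed() {
  local hour=$1 begin=$2 end=$3
  if [ "$begin" -lt "$end" ]; then
    if [ "$hour" -ge "$begin" ] && [ "$hour" -lt "$end" ]; then echo yes; else echo no; fi
  else
    if [ "$hour" -ge "$begin" ] || [ "$hour" -lt "$end" ]; then echo yes; else echo no; fi
  fi
}
```

Under this model, `set_scrub_window 22 6` confines newly started scrubs to 22:00 through 05:59, and `scrub_allowed 14 22 6` prints `no`. Because injected values last only until an OSD restarts, the same settings also belong in `ceph.conf`.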

A Stellar Performance

Performance improves with each release of Ceph, and 1.3 is no exception. A number of changes both raise Ceph’s top-line speed and make IO more consistent in certain use cases.

Read-ahead tuning is an example of the first class of enhancements: a booting virtual machine can now load its Linux kernel in a single cluster IO operation, significantly speeding up boot. SanDisk’s announcement of the InfiniFlash storage array earlier this year brought Ceph to new peak performance heights. A JBOF (“just a bunch of flash”), InfiniFlash delivers a high-performance Ceph cluster that shines thanks to codebase improvements removing bottlenecks that all-flash hardware had exposed, with some impressive results already circulating in the Twittersphere.
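A back-of-envelope count shows why fetching a kernel in one large request matters: every request a booting guest issues costs a round trip to the cluster. The model below is a simplification, not librbd’s actual read-ahead algorithm; the 8 MiB image size and the `cluster_reads` helper are hypothetical. librbd’s read-ahead behavior is tuned through client-side `rbd_readahead_*` options such as `rbd_readahead_max_bytes`.

```shell
#!/usr/bin/env bash
# Simplified model (an assumption, not librbd's algorithm): count the cluster
# read operations needed to fetch BYTES when each operation moves at most CHUNK bytes.
cluster_reads() {
  local bytes=$1 chunk=$2
  echo $(( (bytes + chunk - 1) / chunk ))   # ceiling division
}

KIB=1024
MIB=$((1024 * KIB))
# Hypothetical 8 MiB kernel image read as 4 KiB guest requests...
echo "without read-ahead: $(cluster_reads $((8 * MIB)) $((4 * KIB))) reads"
# ...versus one read-ahead request large enough to cover the whole image.
echo "with read-ahead:    $(cluster_reads $((8 * MIB)) $((8 * MIB))) read"
```

The first line reports 2048 round trips and the second a single one; real savings depend on the read-ahead limits configured on the client.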

To make performance more consistent, new features such as allocation hinting and cache hinting reduce degradation in specific situations. Allocation hinting, where a client declares an object’s expected size and write size up front, helps prevent fragmentation of the underlying XFS file system, while cache hinting avoids unnecessary cache evictions when operations are known to be one-off, as in a backup sweep.
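For allocation hints, the `rados` command-line tool exposes a `set-alloc-hint` operation that records an object’s expected size and expected write size, which a FileStore OSD on XFS can turn into an extent size hint. The wrapper below is a minimal sketch: `hint_object` is a hypothetical helper, the pool and object names in the usage note are examples, and `RADOS=echo` produces a dry run. Cache hints, by contrast, are set per operation through librados flags rather than from the command line.

```shell
#!/usr/bin/env bash
# Sketch: attach an allocation hint to a RADOS object.
# RADOS defaults to the real CLI; run with RADOS=echo for a dry run.
RADOS=${RADOS:-rados}

# hint_object POOL OBJECT EXPECTED_OBJECT_SIZE EXPECTED_WRITE_SIZE (sizes in bytes)
# Hypothetical helper name; the underlying rados subcommand is set-alloc-hint.
hint_object() {
  $RADOS -p "$1" set-alloc-hint "$2" "$3" "$4"
}
```

For instance, `hint_object rbd myobject 4194304 4096` declares a 4 MiB object that will be written in 4 KiB pieces.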

Operational Efficiency

The third focus of this release is making the admin’s life easier: reducing the time it takes to get things done, and automating away repetitive operations with management tooling wherever possible.

On the monitoring side, the Calamari management system has added CRUSH map management to its REST API and now supports multiple users (including read-only users), while its UI can now manage multiple clusters. At a lower level, faster RBD operations on whole images make resize, delete, and flatten quicker, while parallelized RBD export speeds up backups.
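The whole-image RBD operations mentioned above map onto `rbd` subcommands. The sketch below wraps them in hypothetical helper functions. It assumes that, like other Ceph tools, `rbd` accepts config overrides such as `--rbd_concurrent_management_ops` on the command line, and that this option bounds how many object operations librbd keeps in flight for whole-image work; the value 20 is an arbitrary example, and `RBD=echo` gives a dry run.

```shell
#!/usr/bin/env bash
# Sketch of whole-image RBD operations.
# RBD defaults to the real CLI; run with RBD=echo for a dry run.
RBD=${RBD:-rbd}
# Concurrency for whole-image operations (arbitrary example value).
OPS=${OPS:-20}

grow_image()    { $RBD resize --size "$2" "$1"; }   # IMAGE NEW_SIZE_MB
flatten_clone() { $RBD flatten "$1"; }              # copy parent data into the clone
delete_image()  { $RBD rm "$1"; }                   # IMAGE
backup_image()  {                                   # IMAGE DEST_FILE
  $RBD export --rbd_concurrent_management_ops "$OPS" "$1" "$2"
}
```

A nightly backup might then be `backup_image rbd/vm1 /backup/vm1.img`, raising `OPS` only as far as the cluster can absorb the extra load.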

On the installation side, we greatly improved the setup experience of the component formally known as the Ceph Object Gateway. Adopting the embedded Civetweb web server has simplified RGW configuration to the point that it is now a shell one-liner:

```shell
ceph-deploy install --rgw <node-name> && ceph-deploy rgw create <node-name>
```

Additional features include full support for IPv6 networking; Local and Pyramid erasure codes, which optionally trade more storage overhead for less bandwidth-intensive recovery; and, in the compatibility column, eagerly awaited support for S3-compatible object versioning alongside Swift storage policies.
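The storage-versus-recovery trade-off of locally repairable codes is easy to quantify. With the LRC plugin (for example, a profile created by `ceph osd erasure-code-profile set LRCprofile plugin=lrc k=4 m=2 l=3`), the k+m chunks are split into groups of l and each group gets one extra local parity chunk, so a single lost chunk can be rebuilt from the l survivors in its group instead of from k chunks. The arithmetic below assumes k+m is divisible by l; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Worked arithmetic: plain k+m erasure code versus an LRC layout.
# Assumes (k+m) is divisible by l, with one local parity chunk per group of l.

# overhead_pct CHUNKS K: raw capacity as a percentage of usable capacity.
overhead_pct() { echo $(( $1 * 100 / $2 )); }

# lrc_chunks K M L: total chunks stored per object in the LRC layout.
lrc_chunks() {
  local k=$1 m=$2 l=$3
  echo $(( k + m + (k + m) / l ))
}

k=4 m=2 l=3
plain=$((k + m))
lrc=$(lrc_chunks $k $m $l)
echo "plain k=$k m=$m: $plain chunks, $(overhead_pct $plain $k)% raw/usable, repair reads $k chunks"
echo "LRC k=$k m=$m l=$l: $lrc chunks, $(overhead_pct $lrc $k)% raw/usable, repair reads $l chunks"
```

For k=4, m=2, l=3 it reports 6 chunks (150% raw/usable) and 4-chunk repairs for the plain code, against 8 chunks (200%) and 3-chunk repairs for LRC: more capacity spent in exchange for less recovery traffic.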

Standing on the Shoulders of a Great Community

As I write this between sessions at the Red Hat Summit, Bloomberg’s Head of Compute Architecture, Justin Erenkrantz, gave me the best note to end this post on during his Summit session.

“Ceph has earned our trust” — Justin Erenkrantz (Bloomberg)

With a great, passionate Community, Ceph is rapidly becoming the benchmark against which storage systems are measured. As the rain of bits falling on every CIO makes the rise of Software-Defined Storage inevitable, this is a truly exciting space for all of us to work together on the future of storage!
