Crossing the Pacific

The Pacific Ocean spans nearly a third of the Earth’s surface. Our ambitions for Red Hat Ceph Storage 5 are smaller, but only just. Releasing RHCS 5 today, on Mary Shelley’s day, is a fitting tribute to the amazing creative effort of the more than 195 people who worked on the product directly, and of the many, many others who did so indirectly as part of the largest Open Source community any enterprise storage product enjoys.

UX at the Shell: Cephadm

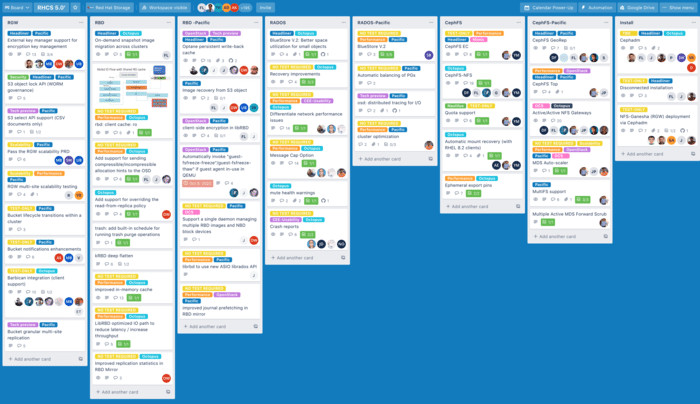

RHCS 5 maintains compatibility with existing Ceph installations while enhancing Ceph’s overall manageability. It extends the management user interface introduced last year, giving operators better oversight of their storage clusters without requiring them to master the internal details of the RADOS distributed system, which further flattens the learning curve for software-defined storage (SDS) adoption. Operators can manage storage volumes, create users, monitor performance, and even assign fine-grained permissions using role-based access controls (RBAC). Power users can still operate clusters from the command line as before, and now have additional delegation options for their junior administrators, combining the best of both worlds.

The new Cephadm integrated cluster management system automates Ceph lifecycle operations, including scaling out (and down) as well as installation and upgrades. Embedding Ceph-aware automation in the product flattens the learning curve for building a distributed storage cluster, requiring no Ceph experience whatsoever on the part of those deploying a cluster for the first time. While carefully selecting production hardware from a validated reference architecture remains necessary to ensure a good balance of economics and performance, the new installer significantly lowers the bar to deploying a Ceph cluster for hands-on evaluation, as DevOps scripting expertise is no longer required.

Further extending Ceph’s manageability, we are declaring the management API stable with version 5.0. Any automation designed to consume it will be supported for the full five-year lifecycle of a major release without requiring significant changes, delivering our customers’ most-voiced request since the introduction of the management dashboard itself.

Data Reduction and Performance

This new release rounds out the data reduction options available to customers, spanning replica 3 HDD, replica 2 SSD, erasure coding for object, block, and file access, as well as native back-end compression. These choices are not exclusive: storage pools can offer different cost-to-performance ratios, enabling operators to match a workload with its ideal environment without pre-allocating storage capacity. A dramatic four-fold reduction of the space consumed by very small files on solid state devices (and sixteen-fold on rotating media!) completes the gains in space efficiency.
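To make the trade-offs above concrete, here is a minimal back-of-the-envelope sketch of how much raw capacity each protection scheme consumes, and of how allocation-unit rounding inflates very small files. The 4+2 erasure-coding profile and the allocation unit sizes (16 KiB and 64 KiB before, 4 KiB after) are illustrative assumptions rather than figures stated in this post, and the arithmetic ignores padding, metadata, and compression.

```python
import math

KIB = 1024
TIB = 1024 ** 4


def raw_bytes_replicated(logical_bytes: int, replicas: int) -> int:
    """Raw capacity consumed when each object is stored `replicas` times."""
    return logical_bytes * replicas


def raw_bytes_erasure_coded(logical_bytes: int, k: int, m: int) -> float:
    """Raw capacity for a k+m erasure-coded pool (ignores padding and metadata)."""
    return logical_bytes * (k + m) / k


def allocated_bytes(object_bytes: int, alloc_unit: int) -> int:
    """Space consumed when an object is rounded up to whole allocation units."""
    return math.ceil(object_bytes / alloc_unit) * alloc_unit


if __name__ == "__main__":
    schemes = [
        ("replica 3", raw_bytes_replicated(TIB, 3)),
        ("replica 2", raw_bytes_replicated(TIB, 2)),
        ("EC 4+2 (hypothetical profile)", raw_bytes_erasure_coded(TIB, 4, 2)),
    ]
    for label, raw in schemes:
        print(f"{label}: {raw / TIB:.2f} TiB raw per TiB stored")

    # Assumed allocation units, chosen only to illustrate the rounding effect.
    small_file = 1 * KIB
    for media, old_unit in [("SSD", 16 * KIB), ("HDD", 64 * KIB)]:
        ratio = allocated_bytes(small_file, old_unit) // allocated_bytes(small_file, 4 * KIB)
        print(f"{media}: a 1 KiB file takes {ratio}x less space with a 4 KiB unit")
```

Under these assumptions, the rounding effect alone is enough to account for reductions of the size quoted above for very small files, while larger files see little difference.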

Performance improvements are extensive, and we are limited to calling out the highlights here. A systematic overhaul of block-storage caches is the most visible result of joint work with Intel.

A new, larger client-side read-only cache offloads reads of immutable objects from the Ceph cluster proper in use cases like accessing virtual machine master images in OpenStack environments, benefiting gold image workflows. Paired with a write-around cache that is no longer used to serve reads but only to batch writes (the reads are served by the OS page cache), and with broad testing and optimization of data access code paths, the entire librbd stack has been tuned to deliver improved performance. From a user’s perspective, this is of particular interest to operators of all-solid-state clusters, and brings librbd performance closer to kRBD’s.

A specially designed write-back cache optimized for Intel’s Optane hardware is being introduced with RHCS 5, providing a caching option for hypervisors with the potential to dramatically improve latency for certain workloads. Data flowing from a client is written to an Optane drive co-located on the hypervisor node hosting the VM. A background, asynchronous write-back process then persists the data to the Ceph cluster. BlueStore v2 brings a collection of internal back-end enhancements that improve performance and memory efficiency in what has successfully become our default back end.
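The write-back flow described for the Optane cache can be illustrated with a small toy model. This is only a sketch of the general write-back pattern, not the librbd implementation: the `WriteBackCache` class and its methods are hypothetical, a dictionary stands in for the local Optane device, and another dictionary stands in for the Ceph cluster.

```python
import queue
import threading


class WriteBackCache:
    """Toy write-back cache: acknowledge writes once they are in a fast
    local log, then persist them to the backing store in the background."""

    def __init__(self, backing_store: dict) -> None:
        self.local_log = {}                 # stands in for the local Optane drive
        self.backing_store = backing_store  # stands in for the Ceph cluster
        self._lock = threading.Lock()
        self._pending = queue.Queue()
        threading.Thread(target=self._flush_loop, daemon=True).start()

    def write(self, key: str, data: bytes) -> None:
        """Record the write locally and return without waiting for the cluster."""
        with self._lock:
            self.local_log[key] = data
            self._pending.put((key, data))  # queued in write order

    def read(self, key: str):
        """Serve not-yet-flushed data from the local log, otherwise from the store."""
        with self._lock:
            if key in self.local_log:
                return self.local_log[key]
            return self.backing_store.get(key)

    def _flush_loop(self) -> None:
        """Background writer: persist queued writes, oldest first."""
        while True:
            key, data = self._pending.get()
            with self._lock:
                self.backing_store[key] = data
                # Evict only if no newer write to this key is still waiting.
                if self.local_log.get(key) is data:
                    del self.local_log[key]
            self._pending.task_done()

    def flush(self) -> None:
        """Block until every queued write has reached the backing store."""
        self._pending.join()


if __name__ == "__main__":
    cluster = {}
    cache = WriteBackCache(cluster)
    cache.write("block-0", b"hello")
    print(cache.read("block-0"))  # readable right after the local write
    cache.flush()
    print(cluster["block-0"])     # persisted once the background flush completes
```

The point of the pattern is that the caller's write latency depends on the local device alone, while durability in the cluster follows asynchronously. A real cache also has to survive crashes and bound the size of the local log, which this sketch leaves out.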

Not to be outdone, the RGW team has recently clocked a new milestone of 10 billion objects.

Security

RHCS 5 also delivers marked improvements in security posture with full support for installing in FIPS-compliant environments, enabling its use in highly secure FedRAMP-certified deployments, either in combination with OpenStack or standalone. S3 Object Lock API support adds write-once, read-many (WORM) semantics to our award-winning object store, which begins its path towards certification with this release. The object store gateway is also adding support for multiple key and secret management back ends, including HashiCorp Vault, OpenStack Barbican, and IBM Security Key Lifecycle Manager (SKLM). The new Messenger 2.1 back-end protocol supports optional full encryption on the private replication network for regulatory environments where all traffic has to be encrypted, even on isolated networks.
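For readers unfamiliar with WORM semantics, the following toy model sketches the idea: once an object is stored with a retention period, attempts to overwrite or delete it are refused until that period has passed. This is a deliberately simplified illustration, not the RGW implementation. The `WormBucket` class is hypothetical and keeps a single version per key, whereas the actual S3 Object Lock API operates on object versions in a versioned bucket.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional


@dataclass
class StoredObject:
    data: bytes
    retain_until: Optional[datetime] = None  # None means no retention lock


class WormBucket:
    """Toy single-version bucket that refuses changes to locked objects."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}

    def _check_unlocked(self, key: str, now: datetime) -> None:
        obj = self._objects.get(key)
        if obj and obj.retain_until and now < obj.retain_until:
            raise PermissionError(f"{key!r} is locked until {obj.retain_until.isoformat()}")

    def put(self, key: str, data: bytes, now: datetime, retain_days: int = 0) -> None:
        """Store an object, optionally locking it for `retain_days` days."""
        self._check_unlocked(key, now)
        retain_until = now + timedelta(days=retain_days) if retain_days else None
        self._objects[key] = StoredObject(data, retain_until)

    def get(self, key: str) -> bytes:
        """Reads are always allowed: write once, read many."""
        return self._objects[key].data

    def delete(self, key: str, now: datetime) -> None:
        """Delete an object unless its retention period is still running."""
        self._check_unlocked(key, now)
        del self._objects[key]


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    bucket = WormBucket()
    bucket.put("audit.log", b"ledger entries", now=start, retain_days=30)
    try:
        bucket.delete("audit.log", now=start + timedelta(days=1))
    except PermissionError as err:
        print("denied:", err)
    bucket.delete("audit.log", now=start + timedelta(days=31))
    print("deleted after retention expired")
```

Passing `now` explicitly keeps the example deterministic; a real service would rely on its own clock and would not let clients choose the time.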

As always, those of you with an insatiable thirst for detail should read the release notes next—and feel free to ping me on Twitter if you have any questions!

We’re Always Ready to Support You

Red Hat Ceph Storage 5.0 was delivered with our longest maintenance cycle yet, a 71-month support lifecycle right from the start, thanks to a record 35-month extension optionally available through Red Hat’s ELS program. As always, if you need technical assistance, our Global Support team is but one click away using the Red Hat support portal.